Howdy. My name is Chris Punches and I’m here to talk to you a little about the planning around the Deployment Phase and Maintenance Phase in the Software Development Lifecycle.

# Best Practices – I Want to Believe

To preface: over the years I have seen good habits that save lots of work for everybody, and I’ve also been brought in a time or two after damage had already been done by bad habits, often either for the sole purpose of cleaning it up or to make recommendations on what to do. I’ve also dispelled some bad habits that “everyone was doing”, and those are plentiful in the industry as well. Basically, I’m a software, systems and infrastructure janitor, and I try to share what I learn where I can or document my own learning for others.

# Let’s talk about the SDLC, but let’s remove the SD from it

So, for this example I’m going to demonstrate by removing the parts we already know about from the SDLC: The actual design and development of the application. While it would seem at first glance to be important to talk about software development in a talk about the Software Development Lifecycle, that part of the lifecycle actually so dominates most environments that conversation about the rest of the lifecycle can get drowned out:

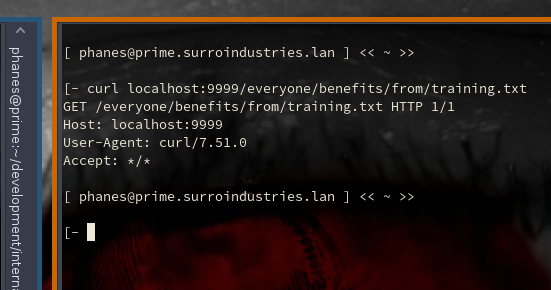

In this example, I want an HTTP ECHO SERVICE that will simply return 200 to a browser along with the headers supplied in the original request. We’re going to skip design and coding, and skip getting it into version control, but assume it will be there and that all best practices in software engineering were followed up to this point.

Note: This will nicely complement my proxy discovery tool that I’ve recently created. It allows you to verify that a proxy on the list is not tagging users with an injected header (something that will certainly compromise the identity of users of that proxy if not checked for before using it).
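The check that note describes boils down to comparing the headers a client sent with the headers the echo service reports it received. Here is a minimal sketch, assuming both sides have already been parsed into name/value maps. `injected_headers` and `to_lower_ascii` are hypothetical names, not part of the author’s tool. HTTP header names are case-insensitive, so names are lowercased before comparison.

```cpp
#include <algorithm>
#include <cctype>
#include <map>
#include <set>
#include <string>
#include <vector>

// HTTP header names are case-insensitive, so compare them lowercased.
std::string to_lower_ascii(std::string s) {
    std::transform(s.begin(), s.end(), s.begin(), [](unsigned char c) {
        return static_cast<char>(std::tolower(c));
    });
    return s;
}

// Hypothetical helper: return the names of headers the echo service
// received that the client never sent, i.e. headers added in transit.
std::vector<std::string> injected_headers(
    const std::map<std::string, std::string>& sent,
    const std::map<std::string, std::string>& echoed) {
    std::set<std::string> sent_names;
    for (const auto& header : sent) {
        sent_names.insert(to_lower_ascii(header.first));
    }
    std::vector<std::string> injected;
    for (const auto& header : echoed) {
        if (sent_names.count(to_lower_ascii(header.first)) == 0) {
            injected.push_back(header.first);
        }
    }
    return injected;
}
```

A proxy that adds something like `X-Forwarded-For` (which carries the client’s address) would show up in the result. This sketch only catches added headers. It does not catch changed values, which would need a second comparison.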

So in this case I got kind of lucky right after I started: the Boost C++ library documentation has an example of a very small HTTP server, which I just kind of hacked up to regurgitate whatever request was supplied to it instead of serving files from a document root and doing all that fun stuff.
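The hacked-up Boost example itself isn’t reproduced here. As a hedged sketch using only the standard library, the hypothetical `make_echo_response` below covers just the transformation at the core of the service. It takes the raw request text and wraps it, headers included, in a `200 OK` response. The socket handling a real server needs is deliberately left out.

```cpp
#include <string>

// Hypothetical helper: build an HTTP/1.1 200 response whose body is the raw
// request the client sent, so the caller can see exactly which headers arrived.
// Networking (accepting connections, reading the request) is out of scope.
std::string make_echo_response(const std::string& raw_request) {
    std::string response;
    response += "HTTP/1.1 200 OK\r\n";
    response += "Content-Type: text/plain\r\n";
    response += "Content-Length: " + std::to_string(raw_request.size()) + "\r\n";
    response += "Connection: close\r\n";
    response += "\r\n";       // blank line ends the header section
    response += raw_request;  // echo the request verbatim as the body
    return response;
}
```

`Content-Length` is computed from the echoed bytes so the browser knows where the body ends. `Connection: close` spares the sketch from handling keep-alive.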

Then an hour of goofing around with gitignore, building the readme, creating the public repo, and we’re at about the point that too many folks stop developing because they think software development only involves the development of software. I actually enjoyed building this so much that I’m going to host it as a permanent service at, say, proxycheck.silogroup.org and I’ll put the source here.

# How to get from “it compiles” to “I GET PAID TO DO THIS”

So here’s the meat of what I’m writing about. The software itself is only one piece of a much larger set of interacting components in your SDLC, and some of those components are the people involved, as well as the time they spend on it, and all the things people have to do to coordinate with that in an operations environment. If I were, say, hosting it in an organization (which I am, SILO and SURRO), then I would have considerably more things to consider than if I were just testing it on a VM or showing a friend.

That’s probably all some folks are used to, besides a “sudo make install”, which you can get away with on a disposable machine but not when actually hosting a service reliably, and which will get you shot in an enterprise. That’s almost as bad as compiling on one machine and just copying the assembly over (READ: PREPROCESSOR, COMPILER, ASSEMBLER, LINKER, LOADER).

If you are out there reading this and writing software, then definitely don’t stop here because this is where it gets cool.

# So how do we get there knowing at least *someone* will want to:

- make updates to this service’s source code that I will want reflected in production

- update the installed version to newly compiled versions after that

- troubleshoot it in familiar, reliable ways when it breaks (assume bugs exist)

- report on the assembly’s metadata as a supplemental process to that

- control the virginity of files associated with that piece of software (hehe I said ‘virginity’)

- control the service’s state, particularly during installation, upgrades, and removal of the software (too many people forget that last one and end up breaking their systems when they’re first learning stuff — think: library conflicts).

These are great initial considerations if you actually care about what you’re doing or if what you’re doing involves other people.

# Enter the OS (+Userland)

First you have to understand that you’re controlling a piece of software’s lifecycle, and that software requires a platform: The Operating System. It is the platform upon which software runs.

This is where a lot of people get tripped up, because it’s easy to forget what the purpose of things is in complex systems after we’ve seen them for a while without using them.

The operating system is the interface between the user and the hardware. Everybody knows that.

Here’s what a lot of people don’t know, though: it consists of a kernel and userland components.

Userland is everything on the OS besides the kernel.

On Linux systems, userland is divided into three categories:

- The C standard library,

- Low-level system components,

- and user applications.

It is similar on Microsoft and Apple systems. System daemons such as systemd, logind, PulseAudio, and other low-level stuff fall into low-level system components. User applications are things like your browser. Apache is a system service; tmux is a user application. We’ve built a system service because:

- It’s always running.

- It’s meant to be a background process.

- It’s unix-like.

Learn more about daemons, or services here. The criteria for a system daemon are well defined and understood and I have no need to justify them here.

If your operating system will be running other software that other people write and support, you don’t handle the needs discussed above in the same layer as your application. But why? First of all, for the same reason you don’t leave a mess in the kitchen when you have roommates. In the case of organizations, you’re also saving time and money through uniformity of process (oh shit, now I have to justify why uniformity of process saves money; ya know what, fuck it, it does, just believe it for now) and through reduced human error (behaving this way is a form of automation, but it is so common now that people don’t even realize it). But, mostly, it’s because of the importance of abstraction:

# Wtf is abstraction?

If you’re a developer, it probably means close to what you think it does, but it isn’t an inherently development-centric term; it’s also an architectural term (and has a similar meaning in several unrelated contexts):

“In software engineering and computer science, abstraction is a technique for arranging complexity of computer systems. It works by establishing a level of complexity on which a person interacts with the system, suppressing the more complex details below the current level.”

So, in this case, here are some revised points of consideration that abstracting the software artifacts solves:

- We want this service to be portable to a wide number of machines ( this allows a rational distribution model for this piece of software and also prevents conflicts with other software on the providing platform )

- We want to be able to deploy, update, and remove cleanly ( this reduces outages of my new service before, during, and after updates and installation )

- We want the above to be done in a uniform manner to other software operations on those same machines ( this reduces human process surrounding unit management on the target platform, allowing one process to exist for all software in a way that’s able to be controlled and improved; this also reduces human error by natural consequence of that, reduces the amount of support training needed on this service, etc. )

- We want to minimize how much a human hand has to touch the unit or the platform serving the unit, and we want it to be easily updatable without breaking things or creating inconsistency in the environment we’re installing to, by not touching too many “things” during operations involving it ( this should include maintenance of whatever mechanism or automation is being used ).

- We want to be able to audit both the assembly and metadata of all associated files during troubleshooting and analysis, even planning, and this metadata should include at minimum version number, dependency trees, install date, and more if we can get it ( while more complex than this, it basically allows us to know

So we need to abstract it into a unit. How does this work?

Artifact abstraction in computer science is widely regarded as packaging the software, and sometimes in several layers of packaging. The definition of packaging is literally abstraction of a software unit.

If you’re in Javaland you’ve probably published artifacts to something like Artifactory. If you’re not, you’ve probably used MSI if you’re on Windows, RPM if you’re on CentOS or another Red Hat derivative, or DEB/APT if you’re on Debian or Ubuntu. If you’re on Arch Linux you’re probably using pacman. Gentoo uses emerge (it technically is package management even if it doesn’t feel like it on Gentoo). There are distro-independent package managers of varying quality; I won’t mention them because they generally tend to bring instability to systems due to bugs. If you’re on OSX, Apple has its own package management scheme, and it’s so seamless that it is barely noticeable; there are also alternative package managers like brew. If you’re on a FreeBSD system, you’ll probably be using pkg (older releases used pkg_add) and portsnap. If you’re on SUSE it’s zypper unless that’s changed (been a few years).

In my case I want to target Fedora, since that’s the distro I use on all my servers currently.

In the case of a robust package manager like rpm or dnf, installation records metadata in the local package database for every file in the package (which package owns it, its size, permissions, checksum, and so on). That meets every need presented but one: control of the service’s state.
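The “virginity” check from the earlier list is what `rpm -V` (verify) does with that database. It compares each installed file against the recorded metadata and prints a line only for files that differ. Each line starts with a nine-character flag field. A dot means the check passed, and a letter names what changed: S size, M mode, 5 digest, D device, L symlink, U user, G group, T mtime, P capabilities. The flags are followed by an optional attribute marker such as `c` for config files, then the path. Below is a minimal C++ sketch of decoding one such line, assuming that standard output format. `VerifyResult` and `parse_verify_line` are hypothetical names.

```cpp
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Hypothetical result type for one line of `rpm -V` output.
struct VerifyResult {
    std::vector<std::string> changed;  // names of the checks that failed
    char attribute = ' ';              // e.g. 'c' for a config file; ' ' if none
    std::string path;
};

// Decode a line such as "S.5....T.  c /etc/httpd/conf/httpd.conf".
// Returns std::nullopt for lines without the nine-character flag field
// (for example "missing" entries), which a caller would handle separately.
std::optional<VerifyResult> parse_verify_line(const std::string& line) {
    static const char letters[9] = {'S', 'M', '5', 'D', 'L', 'U', 'G', 'T', 'P'};
    static const char* const names[9] = {"size", "mode",  "digest", "device", "link",
                                         "user", "group", "mtime",  "capabilities"};

    if (line.size() < 11 || line[9] != ' ') return std::nullopt;

    VerifyResult result;
    for (std::size_t i = 0; i < 9; ++i) {
        if (line[i] == letters[i]) {
            result.changed.push_back(names[i]);  // this check failed
        } else if (line[i] == '.' || line[i] == '?') {
            continue;  // '.' = passed; '?' = could not be run (not reported here)
        } else {
            return std::nullopt;  // not an rpm -V flag field
        }
    }

    std::size_t pos = line.find_first_not_of(' ', 9);
    if (pos == std::string::npos) return std::nullopt;

    // An optional one-letter attribute marker sits between the flags and the path.
    if (line[pos] != '/' && pos + 1 < line.size() && line[pos + 1] == ' ') {
        result.attribute = line[pos];
        pos = line.find_first_not_of(' ', pos + 1);
        if (pos == std::string::npos) return std::nullopt;
    }
    result.path = line.substr(pos);
    return result;
}
```

A changed config file (`c`) is often expected after local tuning. A changed digest on a binary is the kind of line that deserves a closer look during troubleshooting.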

I’ve actually rarely had to justify package management, though; it’s such an introductory concept, and so fundamental to software management, that it almost never needs justifying.

This is not an operating system concept though — it’s actually a software engineering concept, a fundamental one, that the OS makes heavy use of.

Reiteration: The Linux Operating System does not provide a package manager. The project that develops the package manager provides it to the distribution maintainers who decide to include it or change to another package manager. This is easily confused because often the team for a package manager that is widely used by an OS works in close collaboration with that distribution provider.

# On to Daemons and Services

So, I didn’t solve every need that I have for the actually very important “people” part of my SDLC: I need a uniform way to control service state for all system services, not just for this one piece of software but for all software I put on that system. I don’t care if it’s in the base packages the distro provides or something I put on after the fact; I’m building out a complex set of IT services that I want clean management of, and this daemon is no exception. Since in the case of SILO and SURRO the same people who manage the state of this service will also be managing any network services or anything else on the hosts, it’d be much better to have it all using one tool. Without a uniform state management facet of this service’s lifecycle, the SILO and SURRO staff, well trained on Linux systems as they are, will be running around pulling their hair out trying to figure out how to start which service with which method. After about 10 or 20 disparate services like that, it can become almost unmanageable even with copious notes, unless I allocate staff to watch only those services and nothing else (and I can’t allocate people to just OS maintenance, because my application layer is deeply dependent on it and I need them to support that user application layer as my core organizational goal).

But first a word on service state.

# Service State is a Lifecycle Too

If your daemon is running, that’s a state. If it’s not, that’s a state. Here are all the states I care about:

- A running process,

- No running process,

- A boot-enabled process,

- A boot-disabled process.

Services are crazy simple when you look at service states. Just like processes.

Now sure, the application itself may have many states within it, an uncountable number of states — but those are up a layer in this model and I don’t care about them yet. At this point I care about the state of the service itself.

Oh, I should also point out that a consistent method for reporting on the state of the service is necessary as well. During troubleshooting I don’t want to wonder at all which of these four states my process is in (note: not all of these states are mutually exclusive), especially if other services will depend on it (and they will in my case).
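Those four states collapse into two independent yes/no questions, which is why they aren’t mutually exclusive. Is it running? Is it enabled at boot? `systemctl is-active <unit>` and `systemctl is-enabled <unit>` answer them, printing words like `active`/`inactive`/`failed` and `enabled`/`disabled`/`static`/`masked`. Below is a hedged C++ sketch of folding those two outputs into one report. The `ServiceState` type and the `classify`/`describe` functions are hypothetical, and only a subset of the words systemctl can print is handled deliberately.

```cpp
#include <string>

// Two independent axes, so a service can be e.g. running but boot-disabled.
struct ServiceState {
    bool running;       // "A running process" vs "No running process"
    bool boot_enabled;  // "A boot-enabled process" vs "A boot-disabled process"
};

// Fold the one-word outputs of `systemctl is-active` and
// `systemctl is-enabled` into the two-axis model above.
// Only "active" and "reloading" count as running; everything else
// (inactive, failed, activating, ...) is treated as not running.
// Only "enabled" counts as boot-enabled; "static", "masked", "disabled",
// etc. all map to false in this sketch.
ServiceState classify(const std::string& is_active, const std::string& is_enabled) {
    const bool running = (is_active == "active" || is_active == "reloading");
    return ServiceState{running, is_enabled == "enabled"};
}

// Render the state as a one-line human-readable summary.
std::string describe(const ServiceState& s) {
    std::string out = s.running ? "running" : "not running";
    out += s.boot_enabled ? ", boot-enabled" : ", boot-disabled";
    return out;
}
```

In practice the two strings would come from running those commands against the unit. `classify("active", "disabled")` describes a service someone started by hand that won’t come back after a reboot, which is exactly the kind of surprise a consistent report is meant to catch.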

# So how do you bring continuity to service state management?

I’m glad you asked, very large header text.

It starts by understanding how your software is launched.

In all cases, including Windows, Linux, OSX, and BSD, your server starts the same basic way: the BIOS/UEFI firmware hands off to a bootloader, the bootloader loads the kernel, and the kernel starts a single first process. On Linux and other Unix-like systems that process is called init (PID 1), and the kernel executes it from a hardcoded path as the bootstrap mechanism; macOS uses launchd for this role, and Windows has its own equivalent. It gets complicated and divergent from here, but basically init calls other processes. In the case of Fedora, init is systemd itself (/sbin/init is a symlink to it), and it starts the system’s services that allow it to become multiuser and sets up various features that most people don’t dive very far into.
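On Linux you can see for yourself which program the kernel started as PID 1, because its name is exposed in `/proc/1/comm`. Here is a small sketch that reads it. `pid1_name` is a hypothetical helper, and the path is a parameter only so it can be pointed at a test file. On a Fedora host it should return `systemd`. Inside a container it will usually be whatever the container runs as its first process.

```cpp
#include <fstream>
#include <string>

// Read the command name of PID 1 (the init process) from procfs.
// Returns an empty string if the file can't be read (non-Linux, no /proc).
std::string pid1_name(const std::string& path = "/proc/1/comm") {
    std::ifstream in(path);
    std::string name;
    std::getline(in, name);  // comm is a single line ending in '\n'
    return name;
}
```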

From there you log in and do things. Kinda. I skipped over a lot of stuff, but that’s the gist you need for this.

Once it’s able to handle users, I can log in and use its reporting and control tool, systemctl, to:

- Start a service that is not running or stop a service that is running, and enable or disable a service at boot.

- Report on the service state of any service.

And there you have it.

So, if I want a uniform way to manage system services, I should plug right into whatever is bringing up the rest of the daemons running on that server. Even if I don’t, I still am anyway, by proxy of whatever process launches mine; it is not something you can opt out of.

- We want to be able to control the service’s state cleanly.
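Controlling state cleanly cuts both ways, because the daemon has to cooperate. By default, `systemctl stop` has systemd send the service’s processes SIGTERM, escalating to SIGKILL if they don’t exit within a timeout. A daemon that catches SIGTERM and finishes its current work therefore stops cleanly instead of being killed mid-request. Here is a minimal sketch of that pattern. `install_stop_handler`, `request_stop` and `stop_requested` are hypothetical names, and the serving loop itself is left out.

```cpp
#include <csignal>

// Written from the signal handler; volatile std::sig_atomic_t is the type
// the C++ standard guarantees can be safely assigned inside a handler.
static volatile std::sig_atomic_t g_stop = 0;

static void request_stop(int /*signum*/) { g_stop = 1; }

// Hypothetical startup hook: call once before entering the serving loop.
void install_stop_handler() {
    std::signal(SIGTERM, request_stop);  // what `systemctl stop` sends by default
    std::signal(SIGINT, request_stop);   // Ctrl-C when run by hand while debugging
}

// The serving loop checks this between requests and shuts down cleanly
// (close sockets, flush logs, exit 0) once it returns true.
bool stop_requested() { return g_stop != 0; }
```

One caveat with this sketch: with glibc, `std::signal` installs handlers that restart interrupted system calls. A loop blocked in `accept()` may therefore not see the flag until the next connection arrives. A Boost-based server would more naturally use `boost::asio::signal_set` to get the same notification inside its event loop.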

And for the final point:

Oops: changing service state with systemctl requires root, and I don’t want to give root to anyone. SO DOES INSTALLING PACKAGES.

This is a perfectly valid concern. As it should be.

So, we’ve made it this far only for SILO security staff to blow the whistle on my design because SILO’s servers require more than one person to have access. What will I do?

Well, here’s where it can get a little complicated but it’s definitely a solved problem. Since I’m not incompetent I set up all SILO staff to have users in a shared group called “staff”. Granular access control is the point of usergroup administration.

Then, I divided the staff into users who can install software and other users who can manage service state. One subgroup is “installers” and one subgroup is “sysadmins”.

Then, I added two lines to a frequently misunderstood file called the sudoers file.

```
%installers ALL = NOPASSWD: /usr/bin/rpm
%sysadmins ALL = NOPASSWD: /usr/bin/systemctl
```

And from that point forward, my staff who install things have the access they need without a root login, and my staff who control service state have the access they need, also without a root login.
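Both sudoers entries hinge on Unix group membership, which has a subtlety worth knowing when auditing who can do what. A user belongs to a group either through the group’s supplementary member list in `/etc/group` or because it is their primary group in `/etc/passwd`, and the member list does not repeat primary-group users. Here is a POSIX-based sketch of checking both, using `getgrnam` and `getpwnam`. The function name `in_group` is hypothetical; `installers` and `sysadmins` would simply be the group names passed in.

```cpp
#include <grp.h>
#include <pwd.h>
#include <string>

// True if `user` is in `group`, counting both the supplementary member list
// of the group entry and the primary group recorded in the passwd entry.
// getgrnam/getpwnam return pointers to static storage, so this is not
// thread-safe; the reentrant _r variants exist for that.
bool in_group(const std::string& user, const std::string& group) {
    const struct group* gr = getgrnam(group.c_str());
    if (gr == nullptr) return false;  // no such group
    const gid_t gid = gr->gr_gid;

    // Walk the supplementary member list.
    for (char** member = gr->gr_mem; member != nullptr && *member != nullptr; ++member) {
        if (user == *member) return true;
    }

    // Fall back to the user's primary group.
    const struct passwd* pw = getpwnam(user.c_str());
    return pw != nullptr && pw->pw_gid == gid;
}
```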

Or,

I could have them use rpm to install to a shared system path that they do have access to, like /opt/SILO/RPM_ROOT, and force approvals for service scripts, though that can get very complicated quickly.

In my case, I opted for the sudoers entries because they’re very easy to manage, and sudo leaves an audit trail in logs that I have pumping into my monitoring system and SURRO pager.

Even better news:

Packages are as easy to build as whatever software I am packaging. Building them is also usually automated, typically in a CI-like system; check out koji or git-build-rpm or Jenkins if you like getting your hands dirty with the build process. Having a package regenerated on every git commit is excellent, because a commit that doesn’t compile can be rejected immediately.

Systemctl is stupidly simple to use.

I hope that this report saves readers a great deal of money and time — from reallocated time spent automating things, to outage prevention, to general system stability and reduced error rates.

# Implementation

In my case I’m doing a one-off, so I won’t be using a CI model, although I will eventually for SURRO. With this package I’m going to just create the systemd unit file, which is all of about 4 lines, build an RPM, install that, and then open the firewall for my new web service. I unfortunately won’t have time tonight to do that, so it’ll have to be tomorrow. This should cover all the important parts of the lifecycle you need to know. I don’t need to document very much about my service now, since it’s all using tools that I wouldn’t let someone be SURRO staff without knowing how to use. What I mean by that is: while the explanations and reasons behind these choices may have been rather complex, the practices that came out of that thinking are entry-level exposure.