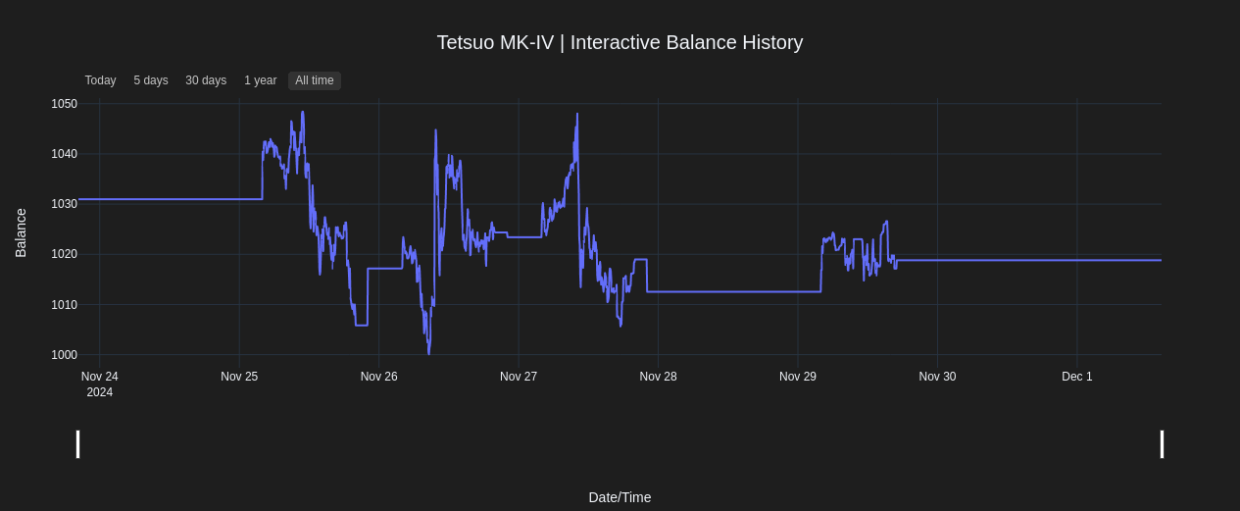

Well, it didn’t go well. Started at 1037.37, ended at 1018.81.

It could have been much worse, but some of the metrics I was using, and the way they were calculated and interpreted, were naive. Still, it was a huge learning experience.

For example, when calculating optimal parameters for the forecast model, I’d been using a weighted directional accuracy metric. Once it went live, though, the metric turned out to be nonsensical: it didn’t correlate strongly with actual directional accuracy over time. I only realized last night that this explains why, during simulation runs, forecast accuracy dropped off rapidly for older dates the further back in time they went.
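I haven't spelled out the exact weighting here, so the sketch below assumes one common variant: each hit or miss is weighted by the absolute size of the actual move. The function names and sample numbers are hypothetical. It only shows how a weighted score can look healthy while plain directional accuracy is poor.

```python
def directional_accuracy(predicted, actual):
    """Fraction of forecasts whose predicted direction matches the actual move."""
    hits = [(p > 0) == (a > 0) for p, a in zip(predicted, actual)]
    return sum(hits) / len(hits)


def weighted_directional_accuracy(predicted, actual):
    """Hits weighted by the absolute size of the actual move.

    ASSUMPTION: this weighting scheme is illustrative, not the metric
    actually used in the project.
    """
    weights = [abs(a) for a in actual]
    total = sum(weights)
    if total == 0:
        return 0.0  # no movement at all, so nothing to weight
    hit_weight = sum(
        w for p, a, w in zip(predicted, actual, weights) if (p > 0) == (a > 0)
    )
    return hit_weight / total


# Hypothetical data: one big move called correctly, four small moves missed.
actual_moves = [6.0, -1.0, 1.0, -1.0, 1.0]
predicted_moves = [1.0, 1.0, -1.0, 1.0, -1.0]

print(directional_accuracy(predicted_moves, actual_moves))
print(weighted_directional_accuracy(predicted_moves, actual_moves))
```

With these numbers the plain score is 0.2 while the weighted score is 0.6, so optimizing parameters against the weighted figure alone could favor a model that is wrong about direction most of the time.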

So I spent a solid four-day weekend pounding away at it, and now have something a little more realistic in place to observe through the week. That said, my certainty about it is lower than ever, and I’ve decided to stop posting metrics on the updates page until I can produce reliable individual forecast accuracy averages above 60% over long periods of time. A model might average 60% over an 8-day period, but add four more days and it can drop to 45%, which makes the claim technically true but useless for what we’re doing.
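To make the window problem concrete, here is a minimal sketch using made-up daily counts (5 forecasts per day), chosen only to mirror the 60%-to-45% example. The function names are hypothetical. It pools correct forecasts over a window and also checks every 8-day window, since the worst window says more about reliability than the best one.

```python
def window_accuracy(hits, totals):
    """Pooled accuracy: correct forecasts divided by total forecasts in the window."""
    return sum(hits) / sum(totals)


def rolling_accuracy(hits, totals, window):
    """Pooled accuracy for every contiguous run of `window` days."""
    return [
        window_accuracy(hits[i:i + window], totals[i:i + window])
        for i in range(len(hits) - window + 1)
    ]


# Hypothetical data: correct forecasts per day, out of 5 forecasts per day.
daily_hits = [3, 4, 2, 3, 3, 3, 3, 3, 1, 0, 1, 1]
daily_totals = [5] * len(daily_hits)

first_8_days = window_accuracy(daily_hits[:8], daily_totals[:8])
all_12_days = window_accuracy(daily_hits, daily_totals)
eight_day_windows = rolling_accuracy(daily_hits, daily_totals, window=8)

print(f"first 8 days: {first_8_days:.0%}")
print(f"all 12 days:  {all_12_days:.0%}")
print(f"worst 8-day window: {min(eight_day_windows):.1%}")
```

Here the first 8 days come out at 60% and all 12 at 45%, while the worst 8-day window is lower still, which is why a single window's average isn't a claim worth publishing.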

It’s a difficult project, and that recurring theme is really why: the numbers don’t make a great deal of sense when I analyze the real-world results, but make a lot of sense when I’m building and simulating the model. It’s been an endless loop of clearly identifying very strong correlations between metrics and results, only to watch them fall apart when the sample window slides.

I guess the next check-in will be on December 7th.